It’s late July or early January. The academic calendar, a document of serene certainty in a chaotic world, tells you the semester is approaching. And so, the ritual begins: “Syllabus Week.”

You open last semester’s or last year’s document. You update the dates, tweak the reading list, and then you freeze. You’ve arrived at the section on Academic Integrity. A year ago, this was boilerplate. A simple, clear statement you hadn’t touched in years. Now, it feels like a minefield. The elephant in every classroom – Artificial Intelligence – is staring you down, and it demands a response.

What do you write?

This single question has launched a thousand panicked emails, department meetings, and frantic searches for a quick fix. The result has been a wave of AI syllabus policies that are, for the most part, pedagogically bankrupt. They are documents born of fear, not foresight.

Before we build a better one, let’s dissect the three deadly sins of AI syllabus design that have become rampant across higher education.

The Three Deadly Sins of AI Syllabus Policies

1. The Total Ban: The Futility of Prohibition

The most common gut reaction is to simply forbid it all. “The use of any generative AI tools for any assignment in this course is strictly prohibited and will be treated as plagiarism.”

It feels clear. It feels decisive. It is also a complete fantasy.

Banning AI is like banning the calculator in a math class or the internet in a research methods course. You aren’t preventing its use; you are merely driving it underground and turning honest students into liars. The tools are ubiquitous, embedded in the very software students use to write (see: Microsoft Word, Google Docs), and the detection tools are notoriously unreliable, prone to false positives that can ruin a student’s career.

Worse, the total ban is a pedagogical surrender. It teaches students nothing except that their professors are either afraid of the future or fundamentally out of touch with the reality of modern knowledge work. You are preparing them for a world that no longer exists.

2. The Vague Warning: The Cowardice of Ambiguity

The second deadly sin is the vague, CYA statement. It’s the paragraph that tries to sound serious without committing to anything specific.

“Students are expected to submit their own original work. Be advised that the misuse of AI tools may constitute a violation of the university’s academic integrity policy.”

This is not a policy; it’s a shrug. It outsources the real work of defining “misuse” to some other office and leaves students in a state of anxious confusion. Can they use AI to brainstorm? To check their grammar? To explain a concept they don’t understand? The Vague Warning provides no answers, creating a climate of fear where students are afraid to ask, lest they incriminate themselves. This ambiguity is a breeding ground for accidental cheating and erodes the trust essential for a healthy learning environment.

3. The AI-Generated Policy: The Irony of the Quick Fix

The most ironic sin of all is the professor who, in a rush, asks ChatGPT: “Write me an AI policy for my syllabus.” The AI dutifully spits out a generic, sterile, and utterly soulless paragraph that the professor copies and pastes without a second thought.

This approach abdicates the single most important role of a professor: to be the intellectual and ethical architect of their own course. A syllabus is not a terms-of-service agreement; it’s a teaching document. It’s your first and best chance to tell your students what your course is about, what you value, and how you think. Outsourcing that core task to the very machine you are trying to regulate is a profound act of intellectual negligence.

Your Syllabus Is a Constitution, Not a Contract

So, what’s the alternative? It starts with a philosophical shift. Stop thinking of your syllabus as a legalistic contract designed to catch cheaters. Start thinking of it as a course constitution: a document that lays out the rights, responsibilities, and guiding principles of your classroom community.

A good constitution doesn’t just list rules; it explains the why behind them. It sets a tone. It establishes a culture. Your AI policy is your chance to do exactly that—to model the kind of critical, ethical, and transparent thinking you want your students to emulate.

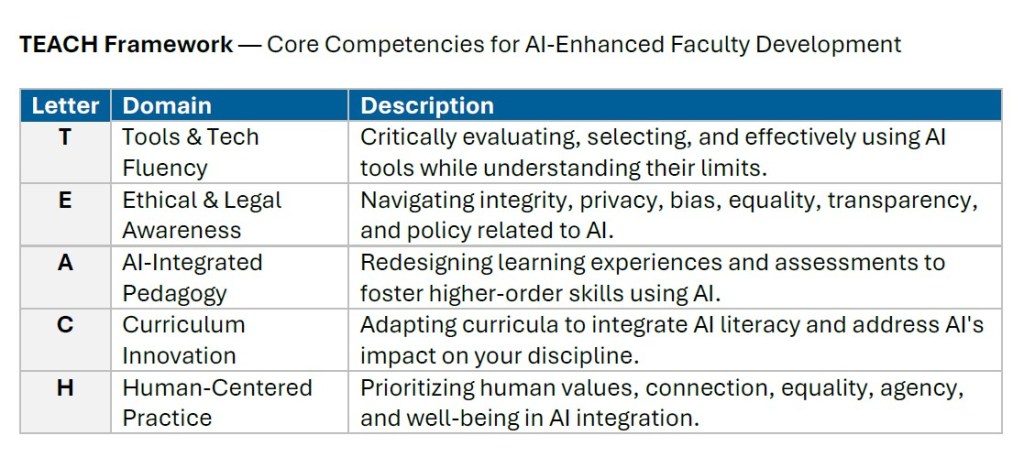

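Using the TEACH Framework (Tools & Tech Fluency, Ethical & Legal Awareness, AI-Integrated Pedagogy, Curriculum Innovation, and Human-Centered Practice), we can build a syllabus policy that is more than a rule: it’s a robust, multi-part teaching manifesto.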

Building a Better AI Policy: A Step-by-Step Guide

Instead of one monolithic paragraph, consider breaking your AI policy into several parts, each addressing a different pedagogical goal.

Part 1: The Guiding Philosophy (Human-Centered Practice)

Start with a brief, human-centered statement about the goals of the course. This sets the stage and frames the policy in terms of learning, not just rules.

Sample Text: “This course is designed to help you develop your unique voice and critical thinking skills as a writer [or historian, scientist, artist, etc.]. Our goal is not just to produce polished work, but to engage in the messy, challenging, and rewarding process of learning. The following policies are designed to support that primary goal in an age of powerful AI tools.”

Part 2: The Tool-Use Policy (Tools & Tech Fluency)

Be explicit about what is allowed, what is encouraged, and what is forbidden. A tiered approach often works best.

Sample Text: “To provide clarity, our course will use a three-tiered approach to AI use. Each assignment will be clearly labeled as one of the following:

- AI-Free Zone: These assignments are designed to assess your unassisted thinking and skills. The use of generative AI for any part of the process (brainstorming, drafting, editing) is not permitted. Example: In-class reflections, final exams.

- AI as Collaborator: On these assignments, you are encouraged to use AI tools as a partner to brainstorm, get feedback, or improve your work. You must cite your use of AI and include a brief reflection on how it assisted you. Example: Research proposals, low-stakes drafts.

- AI as a Subject of Inquiry: These assignments require you to directly engage with and critique AI tools. Example: Analyzing AI-generated text for bias, reverse-engineering prompts.”

Part 3: The Ethical Use Pledge (Ethical & Legal Awareness)

Instead of just stating rules, ask students to commit to principles. This reframes integrity as a shared community value.

Sample Text: “By remaining in this course, you agree to the following ethical principles of AI use:

- I will be honest. I will always be transparent about when, why, and how I am using AI tools in my work.

- I will be accountable. I understand that I am fully responsible for the final submission. I will fact-check AI outputs and correct any errors, biases, or fabrications.

- I will be mindful. I will critically reflect on how these tools are shaping my own learning and thinking, striving to use them to deepen my understanding, not to bypass it.”

Part 4: The ‘Why’ Behind the ‘What’ (AI-Integrated Pedagogy)

Briefly explain the pedagogical reasoning behind your policies. This shows respect for your students as partners in learning.

Sample Text: “You might wonder why some assignments are AI-Free while others encourage AI use. This is not arbitrary. The goal of this course is to build a ‘portfolio of intelligences.’ Sometimes, we will work on your unassisted analytical skills. At other times, we will work on your ability to collaborate effectively with digital tools. Both are essential skills for your future, and our assignment design reflects this.”

Part 5: Connecting to the Future (Curriculum Innovation)

Frame your policy in the context of your discipline and your students’ future careers.

Sample Text: “The skills we are building in this course—critical analysis, ethical reasoning, and effective human-machine collaboration—are precisely what the field of [Your Discipline] demands. Our approach to AI is designed not just for this class, but as preparation for the professional world you are about to enter.”

The Syllabus Is Your First Move

Now, you likely don’t want to write out all five parts in full detail (or maybe you do); together they could easily fill an entire page of your syllabus. So you will have to be intentional and selective about what you include. Still, the TEACH framework and these examples offer a lens for crafting an effective AI syllabus policy.

And putting these pieces together creates a syllabus that is more than a list of dos and don’ts. It’s a thoughtful, coherent, and empowering document. It transforms a moment of administrative anxiety into an opportunity for profound teaching.

It tells your students that you are not afraid of the future. It shows them that you have thought deeply about your craft and that you respect them enough to explain the ‘why.’ It establishes you as a credible, forward-thinking guide who can help them navigate the complex new world they inhabit.

This semester, don’t settle for a policy of fear, ambiguity, or irony. Write a teaching manifesto.

Ready to build your syllabus manifesto?

Enroll in the Teaching with AI Certificate (FAST Track). Policy design is one of the practical modules, alongside assignment redesign, curriculum integration, and human-centered pedagogy. It’s the structured path for moving from awareness to applied fluency.

Explore the FAFI Faculty AI Fluency Index to benchmark your department or campus readiness. Building strong syllabus policies is only the start—FAFI helps you move from awareness to institution-wide fluency.