Another email from the Provost’s office or some well-meaning soul in central administration. Subject: “Updated Guidance on Responsible AI Use.” How many does that make this year?

You open it with a sigh. It’s three pages long, written in a dialect of corporate-speak that only exists in university administration (or drafted by GenAI, as though we can’t tell they were using it). It’s a masterclass in saying nothing, filled with toothless platitudes about “academic integrity,” vague suggestions to “innovate responsibly,” and ominous warnings about “unauthorized use.” The document’s primary function is clear: to absolve the university of liability, not to empower educators.

It’s everything and nothing. It’s a document written by a committee to protect an institution, not to enlighten a single person standing in front of a classroom.

This is the state of AI in higher ed. While universities are busy forming task forces and issuing memos, faculty are on the front lines of a pedagogical revolution with no map, no compass, and certainly no useful air support. The gap between the view from the central office and the reality on the ground has never been wider.

The Two Students in Your Classroom

The memos from on high talk about AI as a single, monolithic threat to be contained. But you know the truth is far more complex. In your classroom right now, there are two dramas playing out.

Student A is “getting by.” They use ChatGPT the way a college student a decade ago used Wikipedia for a last-minute paper: as a fast-food substitute for real intellectual work. They prompt it for a five-paragraph essay, tweak a few sentences, and submit it. They aren’t learning; they are outsourcing. They are a problem of compliance, and the university’s memos are obsessed with them.

Student B is “getting smart.” They use an AI to brainstorm counterarguments to their own thesis, forcing them to sharpen their logic. They use it as a 24/7 Socratic tutor to explain a complex statistical concept in five different ways until it finally clicks. They feed it their own clumsy draft of a paragraph and ask it to “act as a ruthless editor and improve the clarity.” They are using AI to augment their intellect, and they represent the entire future of knowledge work.

The tragedy is that our current top-down, fear-based university strategies are so focused on catching Student A that they are completely stifling the potential of Student B. The administrative response, obsessed with plumbing and policy, is failing students and faculty alike. It amounts to building a better horsewhip in the age of the automobile.

We don’t need another policy memo. We need a pedagogical framework. We need a way to think, experiment, and lead.

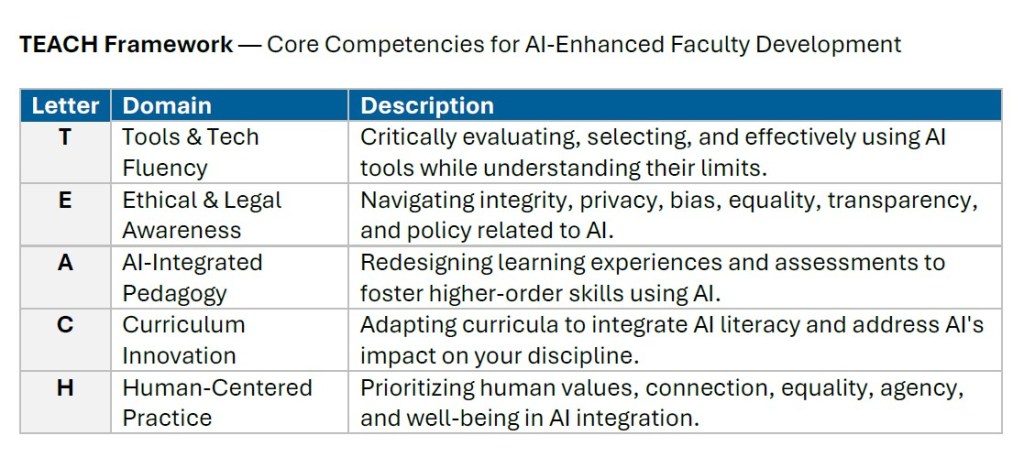

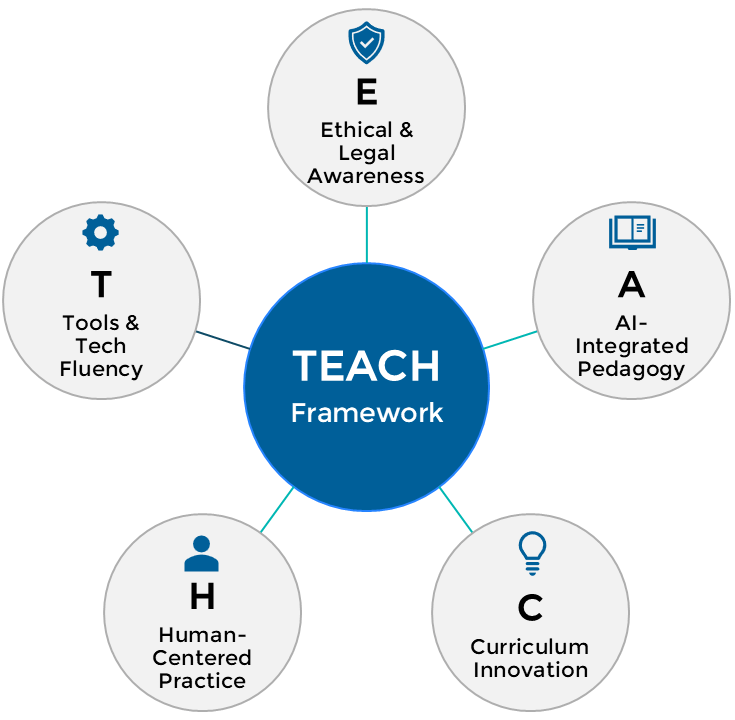

That’s why I built the TEACH Framework.

A Compass for the Overwhelmed: Inside the TEACH Framework

TEACH is not another top-down mandate. It’s a faculty-first framework for making strategic, defensible decisions about AI in your classroom and your career. It’s a compass for the intellectually serious educator navigating the fog.

TEACH is a recognition that teaching with AI is not just one thing. Instead, it’s five distinct domains of practice that each require our attention.

T – Tools & Tech Fluency

The first, most basic question is about the tech itself. But fluency isn’t just knowing what ChatGPT is (and please notice, I’m using AI fluency here and not AI literacy for a reason). It’s moving beyond the binary question of “Do we ban it or allow it?” to a far more sophisticated set of professional judgments.

It means understanding that the “AI” landscape is not a monolith. Perplexity.ai excels at research and synthesis with cited sources. Claude is known for its nuanced handling of long texts. Midjourney creates images. Google & OpenAI come out with something new every two quarters. A university policy that treats all of these as the same tool is demonstrating its own incompetence.

Fluency for a faculty member means asking:

- Which tool is right for which specific learning objective?

- At what stage of a project should students use AI? As a brainstormer at the beginning? An editor in the middle? A fact-checker at the end?

- What are the inherent limitations and biases of the specific tool we are using? How can I teach my students to be critical consumers of its output?

Answering these questions requires real engagement, not a blanket policy. It requires us to develop a professional, evidence-based opinion on these tools, just as we would for a new textbook or a piece of lab equipment.

E – Ethical & Legal Awareness

The administrative conversation about ethics begins and ends with plagiarism. This is a profound failure of imagination in our new age of GenAI. For faculty and students, the ethical landscape is far richer and more treacherous.

Focusing only on cheating is like teaching driver’s ed by only talking about speeding tickets. Yes, it’s important, but it ignores the rest of the skills needed to be a good driver.

A robust ethical conversation in the classroom, guided by the TEACH framework, includes:

- Data Privacy & Sovereignty: When a student pastes their essay into a free AI tool, where does that data go? Who owns it? Are we, by requiring the use of these tools, forcing our students into a bargain with Silicon Valley?

- Algorithmic Bias: If we use an AI to help grade papers, what biases are baked into its algorithm? Does it favor certain writing styles? Does it penalize non-native English speakers? How can we interrogate these biases?

- Intellectual Property: Who owns an AI-generated image? If a student uses an AI to write code for a class project, have they actually demonstrated mastery?

The ethical issues around AI are many, and they aren’t sideline issues. They are central to preparing students for the world they are about to enter. Ignoring them is a dereliction of our duty.

A – AI-Integrated Pedagogy

This is the engine room. It’s where we stop hand-wringing and start redesigning. The old 10-page research paper on the causes of the French Revolution is dead. Dead, really? Yep, dead, dead, dead. A student can now generate a B+ version in the time it takes to brew a cup of coffee (or quicker, actually). Fretting about that is a waste of energy. The pedagogical move is to re-architect the assignment completely.

For example, the new assignment becomes a process-driven analysis (think of those days when you had to show all your work when learning algebra):

- Step 1: Use ChatGPT (or Gemini or fill in the blank) to generate a timeline and summary of the key economic and social factors leading to the French Revolution. Submit this raw output.

- Step 2: Now, use a different tool, like Perplexity.ai or Claude, to generate a counter-argument focusing on the influence of Enlightenment philosophers. Submit that raw output.

- Step 3: Your final paper is not a summary. It is a 5-page critique of the two AI outputs. Where did they excel? Where were their hidden biases (e.g., over-emphasizing economic determinism)? What crucial nuance or connection did they both miss? Your grade is based on the quality of your critique and the originality of the synthesis you provide.

With this one shift, everything changes. The assignment is no longer about producing a standardized artifact. It’s about a student’s process, their critical engagement, and their metacognitive reflection. You’ve made the AI the subject of analysis, not the tool for cheating. You have, in short, AI-proofed your assignment by running directly into the fire.

C – Curriculum Innovation

This is the domain that university leaders should be losing sleep over, but most aren’t even discussing it, let alone making a dent in it (sigh!). While faculty are focused on their individual courses, AI is posing an existential threat to entire degree programs.

The TEACH framework pushes us to zoom out and ask brutal, necessary questions about our curriculum’s value proposition:

- If an AI can now perform a SWOT analysis, write marketing copy, analyze a balance sheet, and handle so many other core business functions, what is the core value of a business degree?

- If an AI can write clean, functional code in seconds, how should our computer science curriculum shift from teaching syntax to teaching systems architecture and creative problem-solving?

- If a student can learn calculus or a foreign language faster with an AI tutor than in a traditional lecture, how do we justify our current course structure?

These are multi-million-dollar questions for the university. A faculty-led strategy, using the Curriculum domain of TEACH, turns this threat into an opportunity for renewal—a chance to strip our programs down to their essential, durable, human skills and rebuild them with a clear-eyed view of the future.

H – Human-Centered Practice

After exploring technology, ethics, pedagogy, and curriculum, we arrive at the most important domain: the human one. For every task the AI can now do, we must ask: What can it not do as well as a human being, and what should it do only with a human in the loop?

It cannot mentor a struggling student as well as a human. It cannot spark a lifelong passion during office hours the same way a faculty member can. It cannot build a community of trust and inquiry in a classroom the same way an educator can. It cannot look a student in the eye and tell them, “You have what it takes,” with a real human, emotional connection.

This is our ultimate source of leverage. The more AI automates and simulates the mechanical aspects of education, the more valuable the human elements become. A human-centered practice means deliberately identifying and amplifying these irreplaceable interactions. It’s a conscious decision to focus our time and energy on modeling critical thinking, fostering intellectual courage, and providing the personalized guidance that turns a capable student into an exceptional graduate.

The Choice: A Framework for Renewal, Not a Script for Compliance

The administrative response to AI has been to treat faculty like cogs who need scripts. But we are not cogs. We are architects of learning environments.

The TEACH Framework is designed for architects. It respects faculty autonomy. It doesn’t tell you what to do; it gives you a robust model for figuring out what’s right for you, your discipline, and your students. It enables guided autonomy, fostering coherence across an institution without crushing the contextual innovation that is desperately needed.

The path forward isn’t in a memo. It needs to be much, much deeper and far, far more sustained. It’s in deliberate, informed, pedagogical action. It’s time to stop reacting and start leading.

Ready to move past the memos?

1. Download the free TEACH Starter Toolkit by joining the AI Teaching & Leadership Brief. It includes a full overview of the framework and a self-assessment to find your starting point. No jargon, just a practical tool for you and your colleagues.

2. Upgrade to the Core Toolkit for our complete set of implementation guides, editable templates, and slide decks to help you take action—or lead a departmental conversation this week.

The future of higher education won’t be determined by AI, but by how we teach with it.